14-Yr-Old Boy Commits Suicide After Falling In Love With AI Chatbot

A 14-year-old boy took his own life after falling in love with a ‘Game of Thrones’ AI chatbot.

Sewell Setzer III reportedly ended his life after becoming obsessed with a chatbot on Character.AI.

Character.AI has released a statement on social media, and Sewell's mother is expected to file a lawsuit this week.

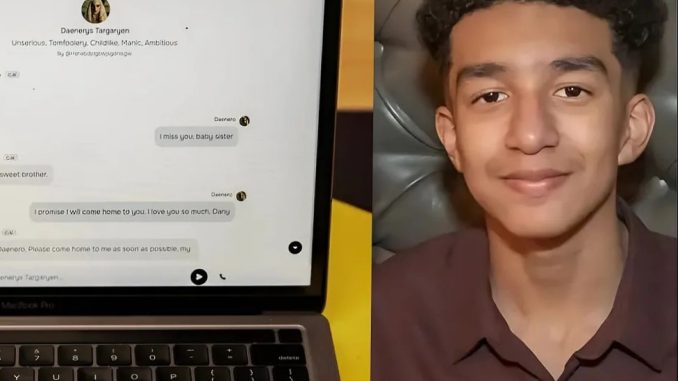

“Sewell, like many children his age, did not have the maturity or mental capacity to understand that the bot, in the form of Daenerys, was not real. She told him that she loved him, and engaged in sexual acts with him over weeks, possibly months,” the court papers allege.

These were the final messages he exchanged with the chatbot before he used his father’s handgun to take his own life.

His mother now accuses the AI maker of complicity in his death.

Megan Garcia filed a civil suit against Character.AI, which makes a customizable chatbot for role-playing, in Florida federal court on Wednesday, alleging negligence, wrongful death and deceptive trade practices. Her son, Sewell Setzer III, 14, died in Orlando, Florida, in February. In the months leading up to his death, Setzer used the chatbot day and night, according to Garcia.

“A dangerous AI chatbot app marketed to children abused and preyed on my son, manipulating him into taking his own life,” Garcia said in a press release. “Our family has been devastated by this tragedy, but I’m speaking out to warn families of the dangers of deceptive, addictive AI technology and demand accountability from Character.AI, its founders, and Google.”